Tweet Sentiment Extraction - Final Blog

This is the final blog post for this NLP competition, in which we discuss some caveats for moving up the leaderboard. In the midway blog, we used the RoBERTa model for inference. In this post, we discuss sentiment-specific predictions, the noise in the data, and post-processing tricks that improve the prediction scores.

Sentiment-specific Jaccard score

If we break down the average Jaccard score by sentiment, the averages for the three sentiments are:

- Positive: 0.581

- Negative: 0.590

- Neutral: 0.976

Many tweets with positive or negative sentiment have a Jaccard score of zero. Let us figure out why.
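The breakdown above can be reproduced with a few lines of code. The `jaccard` function follows the word-level definition published on the competition's evaluation page; the helper `jaccard_by_sentiment` and its input format (tuples of sentiment, selected_text and prediction) are hypothetical, and the rows below are made-up toy data. Alongside the mean, it reports the fraction of zero scores per sentiment, which is what exposes the problem discussed next.

```python
from collections import defaultdict


def jaccard(str1: str, str2: str) -> float:
    # Word-level Jaccard score as defined for the competition
    # (raises ZeroDivisionError if both strings are empty).
    a = set(str1.lower().split())
    b = set(str2.lower().split())
    c = a.intersection(b)
    return float(len(c)) / (len(a) + len(b) - len(c))


def jaccard_by_sentiment(rows):
    """rows: iterable of (sentiment, selected_text, prediction) tuples.

    Returns {sentiment: (mean Jaccard, fraction of zero scores)}.
    """
    scores = defaultdict(list)
    for sentiment, truth, pred in rows:
        scores[sentiment].append(jaccard(truth, pred))
    return {
        s: (sum(v) / len(v), sum(x == 0.0 for x in v) / len(v))
        for s, v in scores.items()
    }


rows = [
    ("positive", "so happy", "happy"),
    ("positive", "love it", "hate"),
    ("neutral", "just a day", "just a day"),
]
print(jaccard_by_sentiment(rows))
# {'positive': (0.25, 0.5), 'neutral': (1.0, 0.0)}
```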

The Noise in Labels & the Magic

At first glance, these results look strange: the selected texts look like random noise and are not a subset of the full text. Here are some cases found by DEBANGA RAJ NEOG:

- Missing a: !Damn! Ithurts!!!

- Missing a: .It isstupid...

- Missing: dingood? LOL. It’s notgoo

- Missing: nginamazing? Dude. It’s notamaziat all!

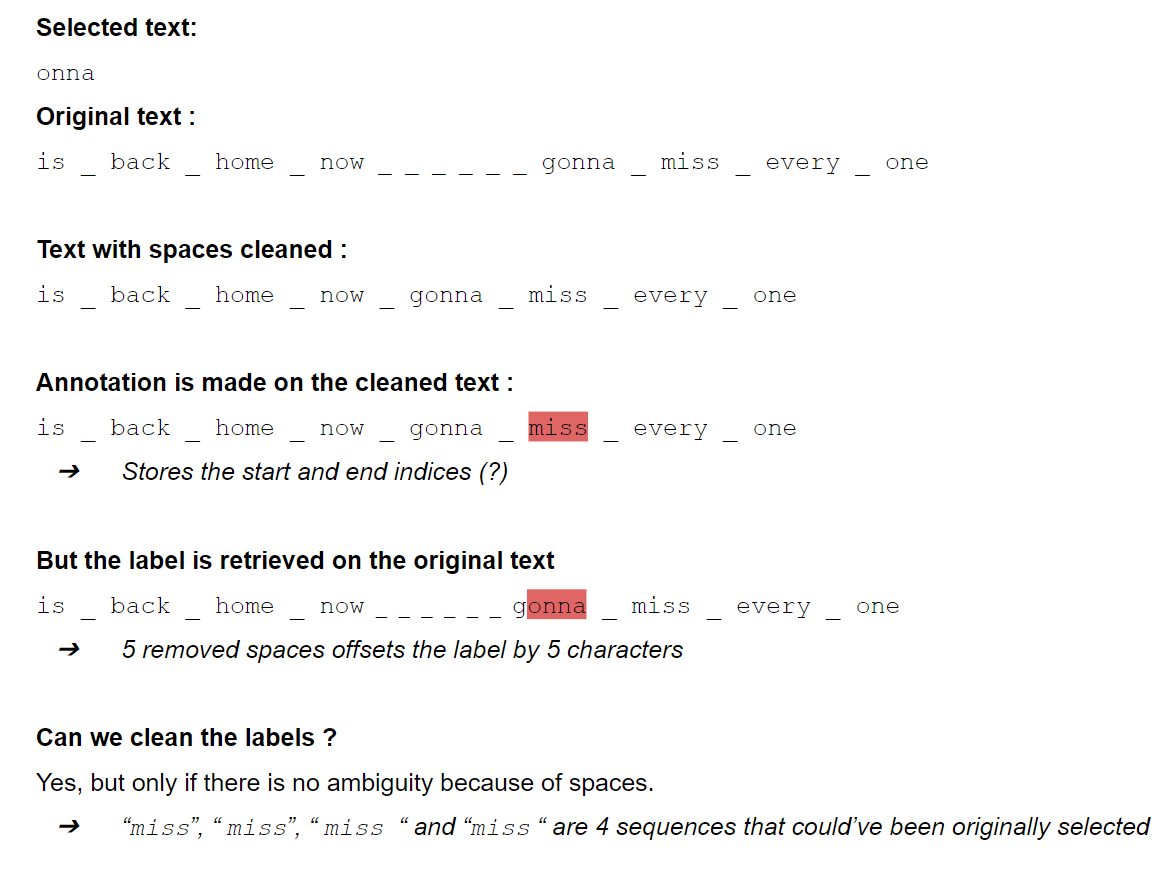
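One quick way to surface labels like these is to flag any selected_text that contains a word which is not a whole word of the full tweet. The sketch below is a crude diagnostic under that assumption: the function `is_noisy_label` and the example tweet are made up for illustration, and the check cannot tell noise apart from an annotator deliberately selecting part of a word.

```python
def is_noisy_label(text: str, selected_text: str) -> bool:
    """Flag a label if any of its whitespace-separated words is not
    a whole word of the full tweet."""
    text_words = set(text.split())
    return any(word not in text_words for word in selected_text.split())


tweet = "Damn!  It hurts!!!"  # made-up tweet containing a double space
print(is_noisy_label(tweet, "Damn! It hurts!!!"))  # False
print(is_noisy_label(tweet, "!Damn! Ithurts!!!"))  # True
```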

It turned out that the noise originated from consecutive spaces in the data. This insight can be leveraged to reproduce the noisy selected text from the token-level start and end probabilities plus an alignment post-processing step, known in this competition as the Magic. The 1st place solution implemented this technique here and found it extremely helpful: it can increase the CV score by around 0.2. The implementation idea of the Magic is sketched below.
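To make the mechanism concrete, here is a minimal sketch that assumes the label noise is a left shift of the character span by the number of extra spaces (every space beyond the first in a run of consecutive spaces) that precede it. This is a simplified illustration of that hypothesis, not the 1st place code; the helper names `extra_spaces_before` and `apply_space_shift` are hypothetical.

```python
import re


def extra_spaces_before(text: str, pos: int) -> int:
    """Count the spaces beyond the first one in every run of
    consecutive spaces inside text[:pos]."""
    return sum(len(m.group()) - 1 for m in re.finditer(r" {2,}", text[:pos]))


def apply_space_shift(text: str, start: int, end: int) -> str:
    """Hypothetical noise model: shift the clean character span
    [start, end) to the left by the number of extra spaces before it."""
    shift = extra_spaces_before(text, start)
    new_start = max(0, start - shift)
    new_end = max(new_start, end - shift)
    return text[new_start:new_end]


text = "Oh  no, it hurts"  # made-up tweet with one double space
start = text.index("it hurts")
print(repr(apply_space_shift(text, start, start + len("it hurts"))))  # ' it hurt'
```

Under this assumption, the shifted span starts one character early and drops its last character, much like the truncated labels such as "notgoo". A model that predicts clean spans would apply this kind of shift as a post-processing step.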

Post-processing tricks

I came up with the post-processing method below, which consistently improves the CV score by about 0.001–0.002. It comprises two tricks. The first is to keep backup indices, i.e. the tokens with the second-highest probabilities for both the start and the end positions, which are used when the predicted start index is larger than the end index. The code for the first trick is below.

The second trick handles special characters (runs of dots, exclamation marks and question marks) using Python's re module, as shown in the function post_process.

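import numpy as np

# Top-2 token indices (best first) for the start and end positions;
# the runner-ups serve as backups.
a, a_bak = np.argsort(preds_start_avg[k,])[::-1][:2]
b, b_bak = np.argsort(preds_end_avg[k,])[::-1][:2]

if a > b:
    # Invalid span (start after end): try combinations with the backups.
    if a_bak <= b and a > b_bak:
        # Backup start with the best end
        st = tokenizer.decode(enc.ids[a_bak-2:b-1])
    elif a_bak > b and a <= b_bak:
        # Best start with the backup end
        st = tokenizer.decode(enc.ids[a-2:b_bak-1])
    elif a_bak <= b_bak:
        # Both backups
        st = tokenizer.decode(enc.ids[a_bak-2:b_bak-1])
    else:
        # No valid combination: fall back to the full tweet
        count_abn_2 += 1
        st = full_text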

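import re

def post_process(x):
    # Collapse a leading run of dots into a single dot ("...word" -> ".word")
    if x.startswith('.'):
        x = re.sub(r"\.+", ".", x, count=1)
    # The remaining rules only touch single-word predictions
    if len(x.split()) == 1:
        x = x.replace('!!!!', '!')
        x = x.replace('???', '?')
        # Shrink runs of dots when the word ends with an ellipsis
        if x.endswith('...'):
            x = x.replace('..', '.')
            x = x.replace('...', '.')
    return x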
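The fallback logic of the first trick can also be separated from the tokenizer and tested on toy probability vectors. In this minimal sketch, the names `top2` and `select_span` and the convention that `None` means "use the full text" are hypothetical; the regular case (start not after end) is assumed to use the top-1 indices, and tie-breaking may differ from `np.argsort(...)[::-1]`.

```python
from typing import Optional, Sequence, Tuple


def top2(probs: Sequence[float]) -> Tuple[int, int]:
    """Indices of the highest and second-highest probabilities."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return order[0], order[1]


def select_span(start_probs: Sequence[float],
                end_probs: Sequence[float]) -> Optional[Tuple[int, int]]:
    """Return (start, end) token indices, or None to fall back to the full text."""
    a, a_bak = top2(start_probs)
    b, b_bak = top2(end_probs)
    if a <= b:                      # assumed regular case: top-1 span is valid
        return a, b
    if a_bak <= b and a > b_bak:    # backup start, best end
        return a_bak, b
    if a_bak > b and a <= b_bak:    # best start, backup end
        return a, b_bak
    if a_bak <= b_bak:              # both backups
        return a_bak, b_bak
    return None                     # no valid combination


print(select_span([0.1, 0.2, 0.6, 0.1], [0.1, 0.5, 0.3, 0.1]))  # (1, 2)
```

Returning indices instead of decoded text keeps the selection rule independent of the tokenizer offsets (`-2`, `-1`) used in the original snippet.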

Moreover, I selected the submission with the highest local CV score rather than the one with the highest public leaderboard score. Luckily, I survived the huge shake-up at the end and finished with a solo silver medal in this competition, ranking 90th out of 2225 teams.